As organizations increasingly adopt various generative AI solutions, the main obstacles are no longer the capabilities of the technology itself, but concerns related to data security, data quality, privacy, and lack of control. Especially in agent-based systems, the core challenge is not what language models are capable of doing, but what their operation is ultimately anchored to.

Headai approaches this challenge as an architectural question: trustworthy agentic AI does not emerge from individual models or frameworks, but from a holistic architecture where sovereignty, auditability, and data governance are foundational principles rather than features added afterward.

This document outlines the core pillars of an agentic architecture for systems where both the overall system and its individual components are trustworthy by design. The approach is based on three core principles that together enable a sovereign and auditable outcome:

1. Complete architectural control

The goal is not to provide a general-purpose framework, but a purpose-built system of directed intelligence designed to deliver reliable and in-depth analysis regardless of the production environment. This applies equally to fully local deployments and private cloud environments, ensuring that data never leaves the organization’s control.

2. Language-model independence

The architecture must support locally run and open-source language models, enabling full data privacy and eliminating structural dependency on individual vendors.

3. Transparent and auditable logic

Achieving trustworthiness requires schema-driven RAG processes that deliberately decompose source data into fine-grained, semantically addressable units, enabling the construction of knowledge graphs and corresponding semantic concept networks, and enforcing predefined decision rules. This removes the mystique from AI behavior and avoids “black box” thinking: every significant conclusion can be justified and traced.

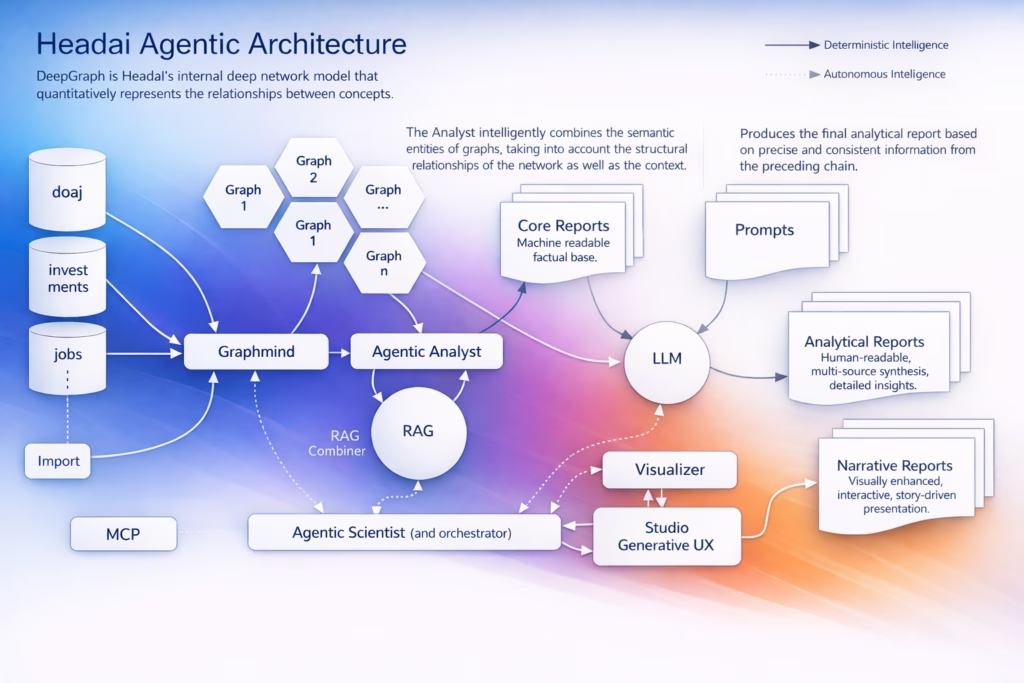

The result of these principles is Data-Anchored Intelligence: an agentic AI architecture in which the generative capabilities of language models are deliberately anchored to high-quality, up-to-date, and auditable knowledge graphs (semantic concept networks).

The key difference from conventional solutions lies in graph-based RAG modeling, where the core of the analysis is semantic concept network technology that identifies and models relationships and meanings between concepts. Agent “intelligence” emerges from this deep understanding of data before a language model even enters the process. This ensures that agent conclusions are based on explicit and genuine data analysis rather than linguistic probability alone.

Such an approach enables organizations to fully leverage conversational AI without compromising on security, reproducibility, or privacy.

Example diagram: Guided data sovereignty in modern AI-based systems

Architectural pillars

To realize the architecture outlined above, the system must be built on four mutually reinforcing pillars that together ensure trustworthiness, performance, and strategic value.

1. Multi-agent architecture: an intelligent agent team

It is necessary to move from traditional monolithic models toward dynamic multi-agent systems. In this model, each agent (Scientist, Analyst, Reporter, Studio) has a clearly defined area of expertise and responsibility within the information processing chain. This decomposition not only enables parallel execution, but also significantly simplifies maintenance, testing, and incremental system expansion. New capabilities are introduced by creating new specialized agents, not by modifying a complex core.

2. Advanced and modular RAG: knowledge-graph-based understanding

At the heart of the architecture is an advanced and modular Retrieval-Augmented Generation (RAG) process that goes beyond the limitations of traditional semantic search. Instead of treating information as isolated documents, the system dynamically builds, decomposes, and analyzes knowledge graphs (semantic concept networks) that model dependencies, causal relationships, and meanings between concepts. Each stage of the process, from building future projections (Signals) to recommendation analysis (Compass), is an independent, schema-driven module, ensuring flexibility and scalability.

3. Transparent and rule-based decision-making: auditable logic

One of the main barriers to adopting AI systems is their perceived “black box” nature. This can be addressed by implementing a rule-based and transparent decision-making model, where agent behaviour is governed by clear, human-readable rules, intent models, and operational logic (e.g., decisions, methods, intentions, datasets, horizons, semantics). This ensures that every decision and its rationale are traceable and auditable, which is essential in regulated industries and critical business processes.

4. Production readiness and enterprise-grade implementation

The architecture must not remain an academic concept, but instead be a production-ready, purpose-built system designed to deliver reliable and in-depth analysis:

- Schema-driven data management: all data transfer and processing are based on validated schemas, ensuring integrity and compatibility.

- Vendor independence: the architecture is model-agnostic. Selecting or changing a model at the level of an individual agent must not require rebuilding the entire system.

- Automated deployment: the system must support fully automated deployment across different environments.

- Comprehensive lifecycle management: data collection, processing, cleansing, and enrichment must be considered as part of the same end-to-end lifecycle.

This holistic approach ensures long-term reliability, maintainability, and low total cost of ownership (TCO).

Conclusions

Trustworthy AI does not emerge from scaling language models, but from anchoring reasoning in real, governed data. Data-Anchored Intelligence is a way to build agentic systems where sovereignty and auditability are structural properties.

Modern language models make it possible for almost anyone to generate nearly any kind of analysis or content. However, this freedom also introduces risk: without sovereignty, language models operate without guarantees of data quality, freshness, or verifiability. In Headai’s approach, sovereignty is implemented as a deliberate control point where the generative capabilities of language models are anchored to high-quality, up-to-date, and externally governed data. The result is not merely a plausible text generator, but an auditable expert system. Headai’s unique graph-based RAG process ensures that agent conclusions are grounded in deep data analysis and semantic relationships rather than superficial document retrieval, making the system a reliable choice for critical business processes.

Headai develops such architectures for demanding analytical and decision-making environments.

Contact us to discuss how this approach could be applied in your organization.

Blog post by Marko Laiho, Headai